Composition of "Visional Legend"

Yoichi Nagashima

Abstract

This is a "live" report on the composition of "Visional Legend" from the point of view of "Human Supervision and Control in Engineering and Music" (e.g., multimedia/interactive art, interaction between the breath and music of the Sho, and human-media interaction with sensors and computer music/graphics). You can follow the "live" process of my composition with the full information and score of the work. Please enjoy!

Fig.1 : cover page of the score of "Visional Legend"

1. The Motive

As a composer and a researcher of computer music, I have two different styles of composition: "needs"-oriented and "seeds"-oriented.

This work, "Visional Legend", was composed from both motivations, which is a special case in my composition.

"seeds-oriented" composition

Through my research and development in computer music, I have found and developed many compositional concepts and ideas: randomness, chaos, neural networks (NN), genetic algorithms (GA), multi-agent multimedia, multi-layer algorithmic composition, etc. These became the "seeds" of ideas that I use experimentally in my compositions.

On the other hand, as an engineer I have built many sensors experimentally (testing new sensing devices, new noise-reduction techniques, new microprocessor systems for human performance, etc.). They were also "seeds" of my research, and were often turned into instruments after development.

"needs-oriented" composition

Like any composer, I begin a composition by studying the theme that "triggered" it (e.g., poetry, traditional/folk instruments, natural/strange sounds, special musical styles, etc.).

On the other hand, I am sometimes requested to develop special sensors/instruments for musicians or artists. This case is also "needs"-oriented, but here I am not a composer but an engineer and a collaborator in the project. I cannot produce the "special instruments" as a composer.

The Motive of the composition of "Visional Legend"

Tamami Tono (my friend, a composer and Sho performer active worldwide) and I have a collaboration project featuring bio-sensing technology in music. The player of the Sho (a Japanese traditional instrument) blows into a hole in the mouthpiece, which sends the air through bamboo tubes that resemble the pipes of a Western organ in both design and timbre. It can produce chords as well as single notes. It is important and interesting that the Sho player uses both directions of the breath stream, and controls the breath pressure for musical expression. I have developed two styles of sensors for Sho performance with bio-sensor technology and microelectronics, produced a multimedia/interactive environment for composition/performance of computer music featuring the Sho, and composed this work.

Fig.2 : Tamami Tono plays the Sho

On the other hand, I have been inspired by, and have kept with me for over 20 years, a poem written by the Japanese poet Shimpei Kusano. As a composer, I have sometimes tried to set this poem in many styles of music, but all attempts failed because of the deep scale of its world. Finally I succeeded in composing with a new method: computer music. Of course, I was deeply affected by the Sho sounds performed by Tamami Tono, and a total "Japanese" atmosphere is the main theme of this music.


2. Musical Elements

The whole sound of this work consists of Sho sounds performed by Tamami Tono and a reading of the poem by Junya Sasaki (baritone). There are two types of sources: a pre-composed CD (background part) and live signal processing of the Sho sound. The performance at this workshop is a partly revised version of the 1998 premiere, but the background CD part and the score for the performer are exactly the same as in the premiere version.

studio work for CD part

First, we recorded Sho sounds at a studio at Keio University SFC. Of course, we used not only the "musical" (traditional) performing style but also tried many contemporary performance techniques: for example, staccato, accent, noise, distortion and singing. Traditional Sho performers refuse these "vulgar" manners because they might be excommunicated, but Tamami was able to explore the possibilities of Sho sounds, so we found many new techniques and good sound materials.

Fig.3 : sound editing in SGI Indy

The Sho sounds (single notes, chords, noises, etc.) were sampled into an SGI Indy workstation, edited with many techniques in the manner of "musique concrète" using the SGI soundeditor software, and processed on an SSC Kyma signal-processing workstation with algorithmic control by Max patches.

In my composition, I usually use the following software for signal processing: Kyma, Max/MSP, SuperCollider, SGI soundeditor, and original programs that I wrote in C in the IRIX (SGI) environment.

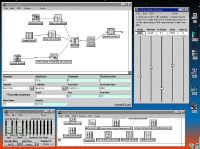

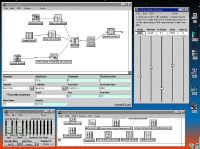

Fig.4 : signal processing patch in Kyma

The baritone voice was recorded at a studio in Tokyo, also sampled into the SGI Indy workstation, and edited with many techniques in the same way as the Sho sounds. In my composition I attach importance to the "singing/speaking voice" because I have composed over 100 choral works, but in this work I simply apply a pitch-shift effect to the reading voice.

Fig.5 : algorithm sample in Max

The recorded sounds were divided into many parts, then trimmed, re-shaped with a percussive envelope, pitch-shifted, reversed, and re-triggered either by random algorithms or programmatically by Unix shell scripts. Finally all sound materials were algorithmically constructed (composed) and sampled into a single AIFF file, which was converted to the background CD.

Fig.6 : algorithm sample in Unix shell
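The editing steps named in this section (trimming, percussive envelope, pitch shift, reversal, random re-triggering) can be illustrated with a minimal Python sketch. It is not the original shell scripts or Kyma processing: all function names, the exponential envelope shape, the resampling-based pitch shift and the test tone are assumptions chosen only for illustration.

```python
import math
import random

def trim(samples, start, length):
    """Cut one fragment out of a longer recording."""
    return samples[start:start + length]

def percussive_envelope(samples, decay=5.0):
    """Instant attack followed by an exponential decay (assumed shape)."""
    n = len(samples)
    return [s * math.exp(-decay * i / n) for i, s in enumerate(samples)]

def reverse(samples):
    """Play the fragment backwards."""
    return samples[::-1]

def pitch_shift(samples, semitones):
    """Shift pitch by resampling with linear interpolation.
    The duration changes together with the pitch."""
    ratio = 2.0 ** (semitones / 12.0)
    out_len = int(len(samples) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio
        j = int(pos)
        frac = pos - j
        nxt = samples[min(j + 1, len(samples) - 1)]
        out.append(samples[j] * (1.0 - frac) + nxt * frac)
    return out

def retrigger(fragment, total_len, count, rng):
    """Mix `count` copies of a fragment at random start positions."""
    out = [0.0] * total_len
    for _ in range(count):
        start = rng.randrange(0, total_len - len(fragment) + 1)
        for i, s in enumerate(fragment):
            out[start + i] += s
    return out

# Stand-in for a recorded Sho note: one second of a 440 Hz sine at 8 kHz.
SR = 8000
tone = [math.sin(2 * math.pi * 440 * t / SR) for t in range(SR)]

frag = trim(tone, 1000, 800)
frag = percussive_envelope(frag)
frag = pitch_shift(frag, 12)      # one octave up, half as long
frag = reverse(frag)
layer = retrigger(frag, SR, 6, random.Random(1))
print(len(frag), len(layer))
```

Each function returns a new list, so the steps can be chained in any order, much as separate command-line tools would be chained in a script.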

System and Score

This work is not only live computer music but also multimedia art, so the system consists of (1) the background CD part, (2) live signal processing of the Sho sound via microphone, and (3) the live graphics part. The premiere version (1998) was constructed with 3 video players, 3 CCD cameras and an original MIDI video switcher which switched between live visual sources. This version (2001) has another Macintosh computer which runs the "Image/ine" software and performs real-time image processing with MIDI information from the Max algorithm.

Fig.7 : system block diagram of "Visional Legend"

The live Sho part is entirely improvised by Tamami Tono, so she uses the score only as "cue signs" for the performance.

Fig.8 : score of "Visional Legend"

live processing with Kyma

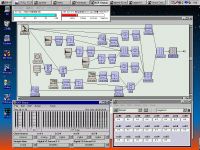

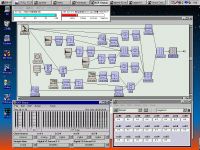

Fig.9 shows the Kyma patch for "Visional Legend" at one moment of the composition. The live Sho sound is sampled and signal-processed in real time in these modules. Many signal-processing parameters are assigned to MIDI parameters and controlled in real time from Max. Because this work uses a pre-composed CD part, the live processing by Kyma is very simple and compact compared with my other works (which use live processing only).

Fig.9 : Kyma patch for "Visional Legend"

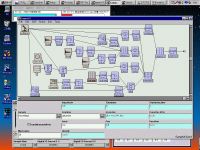

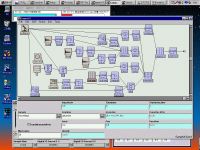

Fig.10 shows one example of a signal-processing block, which acts as a "real-time granular sampling" effect. In this patch there are 29 grains (smoothly enveloped, live-sampled sound elements), which are randomly re-generated according to the [GrainDur], [GrainDurJitter], [Density] and [PanJitter] parameters received via MIDI "control change" messages.

Fig.10 : "granular sampling" sample in Kyma
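The idea of granular sampling can be sketched offline in Python. This is only an illustration under stated assumptions, not the Kyma patch: the Hann window, the way `density` selects how many of the 29 grains are active, and the scaling of 7-bit control-change values to 0.0-1.0 are all guesses made for the example. Only the grain count and the four parameter names come from the text.

```python
import math
import random

NUM_GRAINS = 29  # number of grains given in the text

def cc_to_unit(value):
    """Scale a 7-bit MIDI control-change value (0-127) to 0.0-1.0."""
    return max(0, min(127, value)) / 127.0

def hann(n, length):
    """Smooth envelope value for sample n of a grain (assumed window shape)."""
    if length < 2:
        return 1.0
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * n / (length - 1))

def granulate(buffer, out_len, grain_dur, dur_jitter, density, pan_jitter, rng):
    """Scatter enveloped grains taken from `buffer` into a stereo output.

    grain_dur  : base grain length in samples
    dur_jitter : 0.0-1.0, random deviation of the grain length
    density    : 0.0-1.0, fraction of the 29 grains that sound (assumption)
    pan_jitter : 0.0-1.0, random deviation from the stereo center
    """
    left = [0.0] * out_len
    right = [0.0] * out_len
    active = max(1, round(NUM_GRAINS * density))
    for _ in range(active):
        length = int(grain_dur * (1.0 + dur_jitter * rng.uniform(-1.0, 1.0)))
        length = max(2, min(length, len(buffer), out_len))
        src = rng.randrange(0, len(buffer) - length + 1)   # where to read
        dst = rng.randrange(0, out_len - length + 1)       # where to write
        pan = 0.5 + 0.5 * pan_jitter * rng.uniform(-1.0, 1.0)
        for n in range(length):
            s = buffer[src + n] * hann(n, length)
            left[dst + n] += s * (1.0 - pan)
            right[dst + n] += s * pan
    return left, right

# Stand-in for the live-sampled Sho sound: half a second of a 220 Hz sine.
source = [math.sin(2 * math.pi * 220 * t / 8000) for t in range(4000)]
left, right = granulate(
    source, 8000,
    grain_dur=400,
    dur_jitter=cc_to_unit(64),
    density=cc_to_unit(127),
    pan_jitter=cc_to_unit(32),
    rng=random.Random(0),
)
print(len(left), len(right))
```

In a live setting the four parameters would be updated continuously from incoming control-change messages while grains are regenerated; here they are fixed for one rendering pass.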

3. Graphical Elements

I have created many works of multimedia art, but in almost all cases I have collaborators who create the graphical part of the work, because I am a composer. The first version of "Visional Legend" (1998), however, was composed entirely by myself, including the graphical part (3 video images and slide-show CG), which was a rare case. For the newest version of "Visional Legend" (2001), I selected 2 collaborators, Misaki Kato and Masumi Ohyama, to create an original graphical part for this work.

visual source

As the first step, I presented the background CD part and the poem to the collaborators, and we discussed the image and atmosphere. Then we went on a small trip to a temple and took photos and videos of JIZOs etc. The images captured from these and processed are used as the visual source for the graphical part of this work.


Fig.11 : many many JIZOs

live video switching

Fig.12 shows the system block diagram of the first version of "Visional Legend" (1998). In this system, I used an original "MIDI video switcher" for live control of pre-created graphic content and live video images from 3 CCD cameras. This was the "analog" processing style of the graphical part.

Fig.12 : system of old "Visional Legend" (1998)

Image/ine, QT movie, Firewire

The newest version of "Visional Legend" (2001) has evolved completely to "digital" processing, not only in the musical part but also in the graphical part of the work. I chose a software package called "Image/ine", running on a Macintosh.

Fig.13 : screen shot of Image/ine

We also recorded QT (QuickTime) movies of Tamami Tono performing the Sho, and these movies are used as elements of the graphical part of the work. I am attempting to use not only QT movies but also live images from a CCD camera via FireWire (IEEE 1394, i.LINK) with Image/ine, but this is a challenge, so there is only a small possibility that live CCD information will be used in the performance at the Kassel concert in 2001.

Fig.14 : QT movie of Tamami's performance

4. Interactive Elements

In my composition, I prefer "live" computer music to the "fixed" (sequencer = playback only) style. Thus, there must be interfaces between the human performer and the live computer system, like "instruments" in traditional music. The "interface" is very important in an "interactive art" like music, and I have been working to research, experiment with, and develop new interfaces using sensor technology and microelectronics.

bi-directional breath sensing

Normally the Sho player must remain sitting calmly, so I cannot use popular interfaces like foot pedals, foot volumes, or optical beams sensing the movements of the arms. On the other hand, the breath stream in each bamboo tube is very critical, and it is very difficult to detect the bi-directional pressure for each bamboo pipe. I found that a normal Sho uses 15 bamboos with reeds plus 2 bamboos that are used only for decoration, not for sound generation. So Tamami and I replaced one of them with a "sensing pipe" connected to a small air-pressure sensor module. This sensor detects the bi-directional air pressure in the "air room" at the bottom of the Sho.

Fig.15 shows the pressure sensor and the Sho.

Fig.15 : Sho with pressure sensor

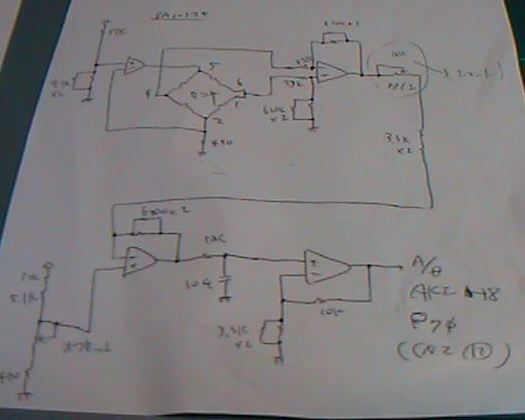

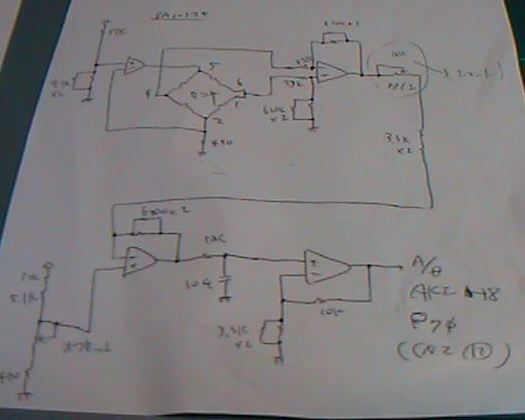

A/D, AKI-H8 and MIDI

This is not a report of engineering but a report of creation/design. Fig.16 - Fig.22 show the process of developing the sensing system, which I did all by myself. For me, this is a part of my composition, like programming, image processing and traditional score-writing.

Fig.16 : air-pressure sensor module [Fujikura]

Fig.17 : sensing circuit

Fig.18 : 32bit microprocessing card AKI-H8

(containing A/D, SIO, RAM, FlashROM, CTC, etc)

Fig.19 : A/D to MIDI circuit

Fig.20 : developing workbench

Fig.21 : CPU programming (assembler) in MSDOS

Fig.22 : MIDI output of breath sensor
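The chain from A/D conversion to MIDI output can be sketched as follows. The actual firmware is written in assembler for the AKI-H8 and is not reproduced in this report, so everything specific here is an assumption made for illustration: the 10-bit converter range, the rest value at mid-scale, the use of two controllers (one per breath direction) and the controller numbers 16 and 17. Only the 3-byte control-change format is standard MIDI.

```python
ADC_MAX = 1023     # assumed 10-bit converter
ADC_REST = 512     # assumed reading when the player is not breathing
CC_EXHALE = 16     # hypothetical controller number for blowing
CC_INHALE = 17     # hypothetical controller number for drawing

def control_change(channel, controller, value):
    """Build a 3-byte MIDI control-change message."""
    return bytes([0xB0 | (channel & 0x0F), controller & 0x7F, value & 0x7F])

def pressure_to_midi(adc_value, channel=0):
    """Convert one bi-directional pressure reading to a control change.

    Readings above the rest point are treated as exhaling and readings
    below it as inhaling; each side is scaled to the 0-127 MIDI range.
    """
    adc_value = max(0, min(ADC_MAX, adc_value))
    offset = adc_value - ADC_REST
    if offset >= 0:
        value = min(127, offset * 127 // (ADC_MAX - ADC_REST))
        return control_change(channel, CC_EXHALE, value)
    value = min(127, -offset * 127 // ADC_REST)
    return control_change(channel, CC_INHALE, value)

for reading in (0, 256, 512, 768, 1023):
    print(reading, pressure_to_midi(reading).hex())
```

Splitting the two directions into separate controllers is one possible design; a single controller centered at 64 would also work, at the cost of halving the resolution for each direction.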

5. Performance

The rehearsal is the most important process in live computer music, because so many parameters can be changed on stage in rehearsal. "Fixed" music on CD, MD, DAT or DVD cannot be changed in rehearsal, apart from the balance setting of the PA. With the sequencer style it is also difficult to change the whole music. But it is easy for me to arrange/trim/change the algorithms and parameters of the music during rehearsal, and the music changes drastically in a short time.

Max control via MIDI

All live control in the performance is generated by Max, which receives the MIDI output of the Sho breath sensor. The breath-sensing information from the Sho is pattern-matched in real time and recognized as "performance information", which triggers changes of sounds and graphics and also acts continuously as control parameters for signal processing, color information in visual effects, etc.

Fig.23 - Fig.27 show the original Max patches for "Visional Legend". In the performance, I am at the computer desk and control the main Max patch in real time as another performer.

Fig.23 : main Max patch in [run mode]

Fig.24 : main Max patch in [edit mode]

Fig.25 : sub patch for Image/ine [run mode]

Fig.26 : sub patch for Image/ine [edit mode]

Fig.27 : universal time-variant subpatch
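The report does not describe how the breath data is pattern-matched, so the following Python sketch shows only one simple possibility: a threshold detector with hysteresis that turns a signed breath stream into discrete trigger events, while the raw value stays available as a continuous control. The class name, the event names and the threshold levels are all hypothetical.

```python
class BreathTracker:
    """Turn a signed breath stream into start/release events.

    Positive values mean exhaling, negative values inhaling (assumed
    convention). Two thresholds give hysteresis so that small
    fluctuations near the threshold do not retrigger events.
    """

    def __init__(self, on_level=20, off_level=10):
        self.on_level = on_level
        self.off_level = off_level
        self.state = "rest"

    def update(self, breath):
        """Feed one reading; return an event name or None."""
        level = abs(breath)
        direction = "exhale" if breath > 0 else "inhale"
        if self.state == "rest":
            if level >= self.on_level:
                self.state = direction
                return direction + "_start"
        elif level <= self.off_level or direction != self.state:
            self.state = "rest"
            return "release"
        return None

tracker = BreathTracker()
stream = [0, 5, 25, 40, 15, 8, -5, -30, -30, 0]
events = [tracker.update(v) for v in stream]
print(events)
```

In use, an event such as `exhale_start` could trigger a scene change in sound or graphics, while `abs(breath)` is passed on as a continuous parameter.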

"chance" and "improvisation"

As you can see when you read/study the score of "Visional Legend", there is no "fixed" note or chord in the Sho performer's part. The Sho part of this work may be played as full improvisation, so the Sho performer must "listen, feel, create" the musical images and play the Sho together with the background CD sound part and the live-generated graphics. In this work I ask the performer for "human supervision and control in music", and I construct the environment for real-time composition and performance scientifically (computer music).

In my algorithmic composition, I usually use many "random" objects in Max patches which control the music as a whole. This does not mean that my music is random or statistical music; rather, my music stands on a (traditionally) tonal atmosphere or a simple style/theory in music. I never use the "random" object directly to generate musical parameters. I always add a "musical filtering" algorithm on top of the randomness, which may be called the "God in music world". Of course, this is just the same as in traditional composition.

For example, the direct output of a "random" object or of sensor MIDI information is an integer (0-127), so it generates a 12-tone chromatic scale or atonality when used as a MIDI note number parameter. On the other hand, the DTM (sequencer) composer sets each note on the score, so the scale or tonality is fixed in each scene of the music.

But I use a "weighted table" algorithm everywhere in my composition. This table converts input integer data into many kinds of musical parameters, and the probability or weight of the conversion can easily be changed in real time. So the scale or tonality is flexible and changeable, by chance or by performance (sensor), at every moment of the music.
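A weighted table of this kind can be sketched in Python as below. This is an illustrative reading of the idea, not the Max patch itself: the class name, the restriction to 12 pitch classes, the cumulative-weight lookup and the example weights are assumptions. The input is the 0-127 integer that a "random" object or a sensor would deliver.

```python
import random

class WeightedTable:
    """Map 0-127 integers to pitch classes through adjustable weights."""

    def __init__(self, weights):
        self.set_weights(weights)

    def set_weights(self, weights):
        """Replace the 12 weights; this can be called at any time."""
        if len(weights) != 12 or min(weights) < 0 or sum(weights) <= 0:
            raise ValueError("need 12 non-negative weights, not all zero")
        self.weights = list(weights)

    def lookup(self, value):
        """Convert a 0-127 input to a pitch class (0-11).

        The input is spread over the cumulative weights, so a pitch
        class with a larger weight receives a larger share of inputs
        and a pitch class with weight 0 is never produced.
        """
        target = (value / 128.0) * sum(self.weights)
        running = 0.0
        for pitch_class, weight in enumerate(self.weights):
            running += weight
            if target < running:
                return pitch_class
        # Fallback for rounding: last pitch class with a non-zero weight.
        return max(i for i, w in enumerate(self.weights) if w > 0)

    def note(self, value, base=60):
        """Return a MIDI note number in the octave starting at `base`."""
        return base + self.lookup(value)

# Example weights favoring C, D, E, G, A (a pentatonic coloring).
table = WeightedTable([4, 0, 2, 0, 3, 0, 0, 3, 0, 2, 0, 0])
rng = random.Random(0)
phrase = [table.note(rng.randrange(128)) for _ in range(8)]
print(phrase)

# Changing the weights during performance changes the tonality at once.
table.set_weights([1] * 12)   # back to an even chromatic spread
```

The same random or sensor input therefore yields different scales depending only on the current weights, which is what keeps the tonality open to change from moment to moment.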

"silence" as music

I use a special video projector for the performance of "Visional Legend". Normal video projectors cannot be shut down immediately, because the lamp must be cooled down by the fan to avoid heat damage. But in the final part of this work, only the Sho sound remains in deep silence, so I chose a special video projector which can be shut down at any time. Thus, you (and also Tamami and myself) can enjoy the perfect silence with the natural Sho sound (of course, the PA is shut down at this moment). This is a different concept from John Cage's, but I think that silence is rich music in Japanese traditional culture.
