"IMPROVISESSION-II" :

A PERFORMING/COMPOSING SYSTEM FOR

IMPROVISATIONAL SESSIONS WITH NETWORKS

Yoichi Nagashima (SUAC/ASL)

1. Introduction

My research project PEGASUS (Performing Environment of Granulation, Automata, Succession, and Unified-Synchronism) has produced many original systems and realized experimental performances. Many special sensors were also built to improve human-computer interaction. The central concept of the project is a compositional approach in computer music called "algorithmic composition" or "real-time composition". This method is applied to these systems together with the idea of "chaos/statistics in music". In the original software, many agents run concurrently, each generating chaotic or statistical phrases independently. Messages from the human performer change the chaotic and statistical parameters, and trigger and excite the dynamics of the chaotic/statistical generators. The improvisation of the human performer is therefore important in these systems and performances. [1-4]

2. Listen to the Graphics, Watch the Music

The next step of the research produced a compositional environment with intersection and interaction between a musical model and a graphical model. The keyword of the project was "listen to the graphics, watch the music", and its purpose was to construct a multimedia composition/performance environment (Fig 1). The system consists of several types of agents linked by object-oriented networks. The 'control' agent sits at the center of the system. This agent generates 'control messages' for both the sound agency and the graphics agency in the time domain, the spatial domain, and the structural domain. The input messages to the control agent can be divided into four types: (1) the traditional scenario of the performers, (2) sensor information from the performance, (3) sounds sampled in real time as material for sound synthesis, and (4) images recorded in real time as material for generating graphics. The composer can describe the scenario as algorithmic composition in a broad sense, covering both sound and graphics. The platforms were SGI workstations connected by networks. [5-9]

3. Improvisession-I

After this research, I produced the first experimental environment/system, called "Improvisession-I". The system ran on 24 SGI Indy computers connected through an FDDI LAN, and the software was developed in C with OpenGL, OSF/Motif, and RMCP (Remote Music Control Protocol). RMCP was developed by Dr. Masataka Goto [10], and the RMCP package is publicly available on the web. The screen resembles a "drawing game", and players can use a mouse, a keyboard, and MIDI instruments (Fig 2). All players can play their own phrases and can broadcast them to other players via RMCP while improvising. Experimental lectures with students of the music course at Kobe Yamate College produced many interesting results on the themes of entertainment and education. For example, one student preferred inviting a specific partner and playing in private, like a chat, to joining the music session with all members. In addition, the volume of all members tended to increase gradually, probably because a phrase played by one student tends to go unheard in a session with all members.

Figure 2. The screen shot of "Improvisession-I".

4. GDS music

Reviewing the problems of the research so far pointed to the following areas for improvement. First, the software was interesting for musical education with elements of entertainment, but the system it requires is not common in ordinary academic environments. Second, RMCP requires a "time scheduling" method that manages synchronization under network latency, but both available methods (RMCPtss [RMCP Time Synchronized Server] and NTP [Network Time Protocol]) seemed too heavy for simple personal computers. Third, and most important, the need to absorb variable network delay flexibly made it clear that the concept of music premised on simultaneity itself had to be reconsidered.

Dr. Goto also advocated the concept of "the music which was late for the difference" in his research called Remote-GIG [10]. Here, that concept is extended more flexibly into an idea called GDSmusic (Global Delayed Session music). The time management that RMCP demanded becomes unnecessary, and users connected through a network can enjoy a music session within the framework of GDSmusic without caring about their individual delays. Of course, this means discarding the simultaneity that was the traditional foundation of a music session, and it requires a new view of session playing with "the shadow of the partner's performance from 1-2 measures in the past". The idea of GDSmusic was first realized concretely as the system "Improvisession-II" introduced below. Beyond this system, a new form of entertainment for the network era is also expected, in which many unspecified people enjoy themselves over a network as in a chat.

5. Improvisession-II

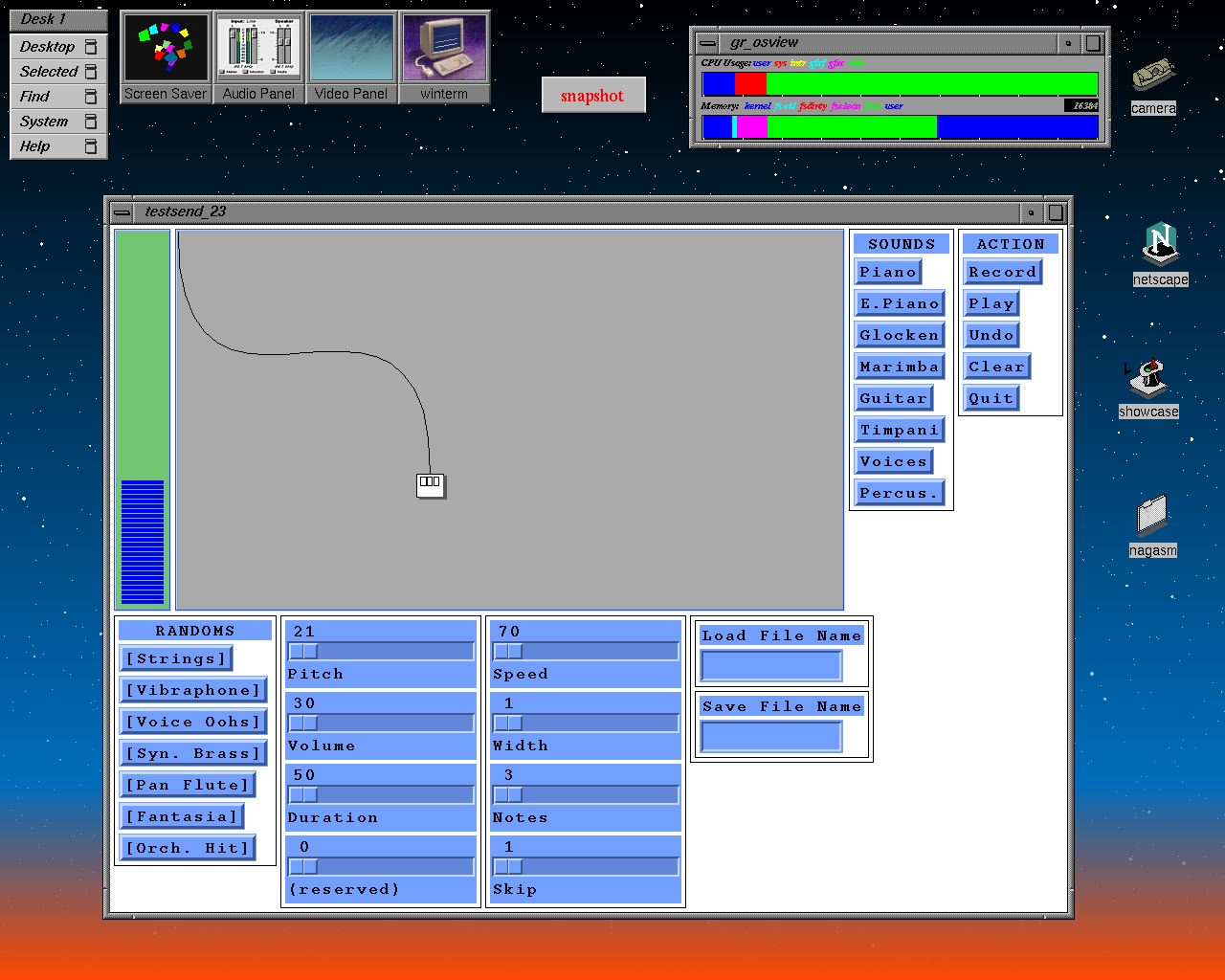

After these experiments and research, I produced the second experimental environment and system, called "Improvisession-II". The platform changed from SGI Indy computers to Macintosh computers connected via Ethernet (Fig 3).

Figure 3. The screen shot of "Improvisession-II".

This software was developed in the Max4/MSP2 environment (by David Zicarelli) using OpenSoundControl ("otudp" objects) developed by CNMAT [11]. The "otudp" object is a UDP object for PPC that uses Apple's OpenTransport networking system. All players can play their own phrases, play with chaotic/statistical generators, and communicate with other performers via UDP/IP while improvising. Unlike RMCP, this system needs no mechanism for managing each user's time. Moreover, unlike RMCP, it is not necessary to transmit musical performance information in real time: only the parameters of the algorithmic music generators that run autonomously within each personal computer need to be exchanged, which reduces the traffic load on the network. The important concrete components of this system are introduced below as an aid to understanding the new concept of GDSmusic.

5.1. System Timing and BGM Generator

A master clock is divided into 32 basic timing clocks (Fig 4). This value is shared by all participants, but it can be changed for everyone at once as "tempo information" from a common server. Among the BGM parts, the drum generator block chooses the percussion instrument corresponding to each of these 32 beats (figure omitted). Fig 5 shows part of the chord generator block, in which a chord phrase spanning the 32 beats is chosen statistically from many chord phrase patterns and performed.

Figure 4. Subpatch of system timing generator block.

Figure 5. Subpatch of chord generator block.
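The timing and BGM selection described in this subsection can be sketched roughly as follows. This is a minimal Python illustration, not the author's Max/MSP patch: the drum patterns, the chord patterns and their weights, and all function names are hypothetical. Only the division of the loop into 32 basic clocks and the statistical choice of a chord pattern come from the text.

```python
import random

TICKS_PER_LOOP = 32  # from the text: the master clock is divided into 32 basic timing clocks

# Hypothetical drum data: for each instrument, one on/off flag per tick.
DRUM_PATTERNS = {
    "kick":  [1 if t % 8 == 0 else 0 for t in range(TICKS_PER_LOOP)],
    "snare": [1 if t % 8 == 4 else 0 for t in range(TICKS_PER_LOOP)],
    "hihat": [1 if t % 2 == 0 else 0 for t in range(TICKS_PER_LOOP)],
}

# Hypothetical chord patterns, each paired with a selection weight.
CHORD_PATTERNS = [
    (["C", "Am", "F", "G"], 3),
    (["C", "F", "G", "C"], 2),
    (["Am", "F", "C", "G"], 1),
]


def tick_interval_seconds(loop_seconds):
    """Duration of one basic clock when a whole loop lasts loop_seconds."""
    return loop_seconds / TICKS_PER_LOOP


def drums_at(tick):
    """Names of the percussion instruments that sound on the given tick."""
    t = tick % TICKS_PER_LOOP
    return [name for name, pattern in DRUM_PATTERNS.items() if pattern[t]]


def choose_chord_pattern(rng):
    """Pick one chord pattern for the next loop by weighted random choice."""
    patterns = [pattern for pattern, _ in CHORD_PATTERNS]
    weights = [weight for _, weight in CHORD_PATTERNS]
    return rng.choices(patterns, weights=weights, k=1)[0]


def chord_at(pattern, tick):
    """Chord that is active on the given tick, with the loop split evenly."""
    span = TICKS_PER_LOOP // len(pattern)
    return pattern[(tick % TICKS_PER_LOOP) // span]
```

A loop would call `choose_chord_pattern` once at its start and then query `drums_at` and `chord_at` on every tick. Changing the shared tempo only changes the value passed to `tick_interval_seconds`.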

5.2. Bass Generator and Melody Generator

In the bass generator block (Fig 6), many scale phrase patterns permitted for each chord are prepared as a table (Fig 7); one is chosen statistically, and the performance is generated. In the melody phrase generator block, players can perform not only by using the graphical musical keyboard but also by controlling parameters (Fig 3) that set the selection probability of each note in the musical scale (Fig 8).

Figure 6. Subpatch of bass generator block.

Figure 7. Bass phrase generating tables.

Figure 8. Subpatch of melody phrase generator block.
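As a rough illustration of the two generators, the following Python sketch picks a bass phrase from a per-chord table and draws melody notes according to per-note selection weights. The table contents, the MIDI note numbers, and the function names are assumptions made for this example; the actual tables are those shown in Fig 7.

```python
import random

# Hypothetical table: bass phrases permitted for each chord quality,
# written as semitone offsets from the chord root.
BASS_TABLE = {
    "major": [[0, 4, 7, 4], [0, 7, 12, 7], [0, 2, 4, 7]],
    "minor": [[0, 3, 7, 3], [0, 7, 12, 7], [0, 3, 5, 7]],
}

C_MAJOR_SCALE = [60, 62, 64, 65, 67, 69, 71]  # MIDI note numbers (assumed scale)


def choose_bass_phrase(chord_quality, root_midi, rng):
    """Choose one permitted phrase at random and transpose it to the root."""
    offsets = rng.choice(BASS_TABLE[chord_quality])
    return [root_midi + offset for offset in offsets]


def generate_melody(scale, weights, length, rng):
    """Draw notes from the scale; weights[i] is the player-set weight of scale[i]."""
    if len(scale) != len(weights):
        raise ValueError("one weight is needed per scale note")
    if sum(weights) <= 0:
        return []  # every weight at zero: the generator stays silent
    return rng.choices(scale, weights=weights, k=length)
```

Raising one weight makes the corresponding note more frequent in the generated phrase, which is one way to read the probability controls described above.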

5.3. Synchronizing Management

In the transmitter block (Fig 9), the selected BGM parameters and the information on the played melody phrases are packed into an OpenSoundControl message and transmitted at the end point of each loop. Importantly, this transmission follows only the timing of the sender's own performance loop, with no regard for synchronization with the other players participating in the network session.

Figure 9. Subpatch of message transmitter block.

The receiver block (Fig 10) is the key to the synchronization mechanism in GDSmusic. First, an incoming OpenSoundControl message is latched at the moment it arrives over Ethernet. Second, this latched information is latched again at the end point of the receiver's own performance loop. Finally, the latched information is triggered by the internal timing clock pulses so that it plays in ensemble with the receiver's internal musical performance.

Figure 10. Subpatch of message receiver block.
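The three stages of the receiver can be sketched as a double latch. The class below is an illustrative Python model rather than the Max/MSP subpatch in Fig 10: the class and method names are hypothetical, and the representation of a message as a mapping from tick number to note is an assumption.

```python
class DelayedSessionReceiver:
    """Double-latch receiver: arrival latch, loop-end latch, clock-driven playback."""

    TICKS_PER_LOOP = 32

    def __init__(self):
        self.arrived = None  # first latch: the most recent message from the network
        self.playing = None  # second latch: the message used during the current loop

    def on_network_message(self, message):
        """Stage 1: latch the message whenever it happens to arrive."""
        self.arrived = message

    def on_loop_end(self):
        """Stage 2: at the end of the local loop, copy the arrival latch forward.

        Assumption: if nothing new has arrived, the previous phrase is kept.
        """
        self.playing = self.arrived

    def on_tick(self, tick):
        """Stage 3: the local clock triggers the partner's note for this tick, if any."""
        if self.playing is None:
            return None
        return self.playing.get(tick % self.TICKS_PER_LOOP)
```

Because the second latch changes only at a local loop boundary, a phrase that arrives in the middle of a loop is first heard from the start of the next loop. The partner is therefore always heard one or more loops late but aligned with the receiver's own beat.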

Consequently, participants who have not synchronized their timing with each other can enjoy a music session, each shaping their own performance together with the performances of other participants that arrived late.

6. Summary

I described two performing and composing systems for improvisational sessions over networks and a concept called "GDS (Global Delayed Session) music". I will develop new sensors for this system as new human-computer interfaces and explore new possibilities for network entertainment in music and multimedia.

References

[1] Y.Nagashima. Real-Time Control System for "Pseudo" Granulation, Proceedings of 1992 ICMC, International Computer Music Association, 1992

[2] Y.Nagashima. PEGASUS-2 : Real-Time Composing Environment with Chaotic Interaction Model, Proceedings of 1993 ICMC, International Computer Music Association, 1993

[3] Y.Nagashima. Chaotic Interaction Model for Compositional Structure, Proceedings of IAKTA / LIST International Workshop on Knowledge Technology in the Arts, International Association for Knowledge Technology in the Arts, 1993

[4] Y.Nagashima. Multimedia Interactive Art : System Design and Artistic Concept of Real-Time Performance with Computer Graphics and Computer Music, Proceedings of Sixth International Conference on Human-Computer Interaction, ELSEVIER, 1995

[5] Y.Nagashima et al. A Compositional Environment with Interaction and Intersection between Musical Model and Graphical Model -- "Listen to the Graphics, Watch the Music" --, Proceedings of 1995 ICMC, International Computer Music Association, 1995

[6] Y.Nagashima. Real-Time Interactive Performance with Computer Graphics and Computer Music, Proceedings of the 7th IFAC/IFIP/IFORS/IEA Symposium on Analysis, Design, and Evaluation of Man-Machine Systems, International Federation of Automatic Control, 1998

[7] Y.Nagashima. BioSensorFusion : New Interfaces for Interactive Multimedia Art, Proceedings of 1998 ICMC, International Computer Music Association, 1998

[8] Y.Nagashima et al. "It's SHO time" -- An Interactive Environment for SHO(Sheng) Performance, Proceedings of 1999 ICMC, International Computer Music Association, 1999

[9] Y.Nagashima. Composition of "Visional Legend", Proceedings of International Workshop on "Human Supervision and Control in Engineering and Music", 2001

[10] M.Goto et al. RMCP : Remote Music Control Protocol -- Design and Applications --, Proceedings of 1997 ICMC, International Computer Music Association, 1997

[11] M.Wright et al. OpenSound Control : A New Protocol for Communicating with Sound Synthesizers, Proceedings of 1997 ICMC, International Computer Music Association, 1997
