Interactive Media Art with Biological Interfaces

Yoichi Nagashima

Shizuoka University of Art and Culture / Art & Science Laboratory

nagasm@computer.org

1. Introduction

In the PEGASUS project (Performing Environment of Granulation, Automata, Succession, and Unified-Synchronism), I have produced many real-time performance systems with original sensors, and have composed and performed many experimental works at concerts and festivals. The second step of the project aimed at "multimedia interactive art" through collaboration with CG artists, dancers and poets. The third step aims at "biological or physiological interaction between human and system". I have previously produced (1) a heartbeat sensor that uses optical information from the earlobe, (2) an electrostatic touch sensor with metal contacts, and (3) single- and dual-channel electromyogram sensors that pick up muscle noise signals directly. This paper reports the newer sensors.

2. MiniBioMuse-III

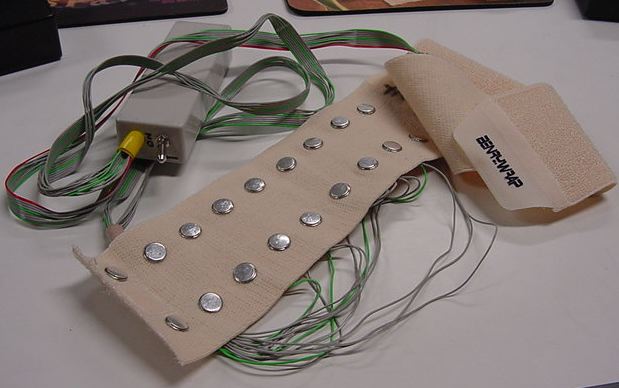

First, I report the development of a compact, lightweight 16-channel electromyogram sensor called "MiniBioMuse-III" (below). This sensor is the third generation of my research in electromyogram sensing. It was developed because high-gain sensing and noise reduction pose many problems on stage, which is a poor environment for bio-sensing.

"MiniBioMuse-III" (only for one arm)

2.1. Development of "MiniBioMuse-III"

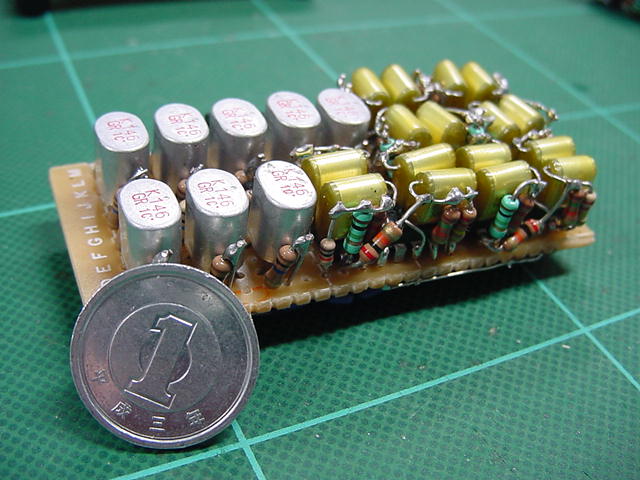

The front-end sensing circuit (below) is designed with thermally coupled dual FETs and cancels common-mode noise. Each belt carries nine contacts, one of which is the common ground.

Front-end circuits of "MiniBioMuse-III"

The 8-channel electromyogram signals from each arm/hand (below) are multiplexed, converted to digital data by a 32-bit CPU, and then converted to MIDI information for the system (below). The system also generates two channels of general-purpose analog voltage output from MIDI input.

High-density assembled front-end circuits (8ch)

32-bit CPU circuits of "MiniBioMuse-III"

This CPU also works as a software DSP that suppresses the hum noise picked up from the environmental AC power supply. Its "notch filter" algorithm for noise reduction is shown below.

Noise reduction algorithm of CPU
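The notch filter itself is given only as a figure. As a rough illustration of the general technique, the following Python sketch implements a standard second-order (biquad) notch filter. It is not the firmware algorithm of the MiniBioMuse-III: the 1 kHz sample rate, the 50 Hz mains frequency, the Q of 10, and the function names are all assumptions made for this example.

```python
import math

def notch_coefficients(f0, fs, q):
    """Normalized biquad notch coefficients (b0, b1, b2, a1, a2) for centre f0 in Hz."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0 = 1.0 / a0
    b1 = -2.0 * math.cos(w0) / a0
    b2 = 1.0 / a0
    a1 = -2.0 * math.cos(w0) / a0
    a2 = (1.0 - alpha) / a0
    return b0, b1, b2, a1, a2

def notch_filter(samples, f0=50.0, fs=1000.0, q=10.0):
    """Filter a list of samples with a direct-form I biquad notch.

    f0, fs and q are assumed values, not taken from the paper.
    """
    b0, b1, b2, a1, a2 = notch_coefficients(f0, fs, q)
    x1 = x2 = y1 = y2 = 0.0          # previous inputs and outputs
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out
```

With these assumed settings, a steady 50 Hz hum is removed after a short settling time, while a 10 Hz component passes almost unchanged. In regions with 60 Hz mains, `f0` would be set to 60.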

2.2. Output of "MiniBioMuse-III"

These are the analog output signals of the front-end circuits of "MiniBioMuse-III".

Sensing output signals of "relax"

Sensing output signals of "hard tension"

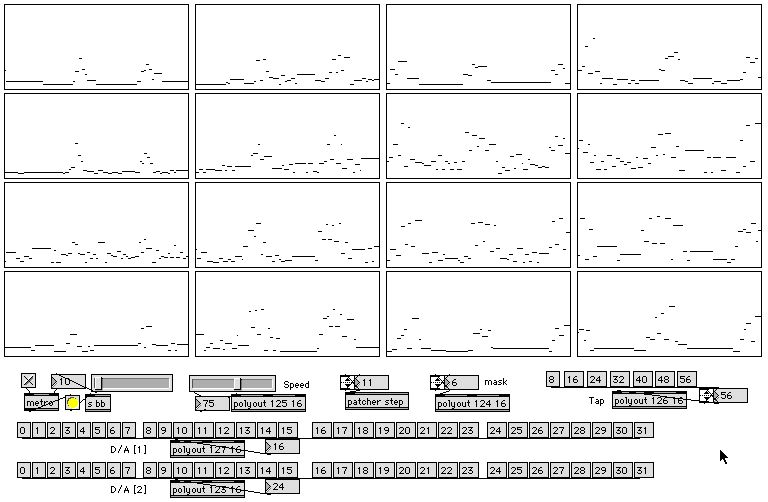

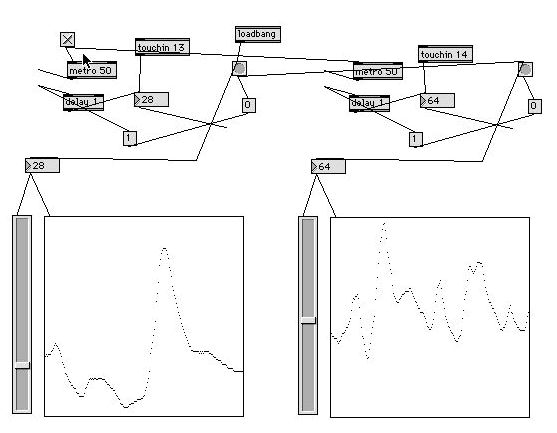

This is the Max/MSP screen showing the MIDI output of this sensor. The 8 + 8 channels of electromyogram signals are all displayed in real time and used as sound-generation parameters. The shortest sampling interval of this sensor is about 5 msec, but the host system can change the interval to match its MIDI receiving capacity.

MIDI output of "MiniBioMuse-III" (Max/MSP)
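To put the sampling interval in context, the short calculation below estimates how long one frame of sensor data occupies a standard MIDI cable. The serial rate (31250 bits per second, 10 bits per transmitted byte) comes from the MIDI specification. The paper does not say how the 16 channels are packed into MIDI messages, so the three byte counts are purely hypothetical encodings chosen for comparison.

```python
MIDI_BAUD = 31250      # bits per second on a standard MIDI cable
BITS_PER_BYTE = 10     # 1 start bit + 8 data bits + 1 stop bit

def frame_time_ms(bytes_per_frame):
    """Time in milliseconds needed to transmit one frame of the given size."""
    return bytes_per_frame * BITS_PER_BYTE * 1000.0 / MIDI_BAUD

# Hypothetical ways of sending 16 channel values (not stated in the paper).
encodings = [
    ("one data byte per channel", 16),
    ("control changes with running status (1 + 16 * 2 bytes)", 33),
    ("full 3-byte message per channel", 48),
]

for label, size in encodings:
    print(f"{label}: {frame_time_ms(size):.2f} ms per frame")
```

Whatever the real encoding is, the frame time grows linearly with the number of bytes per frame, which is one reason a host might ask the sensor for a longer interval.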

2.3. Performance with "MiniBioMuse-III"

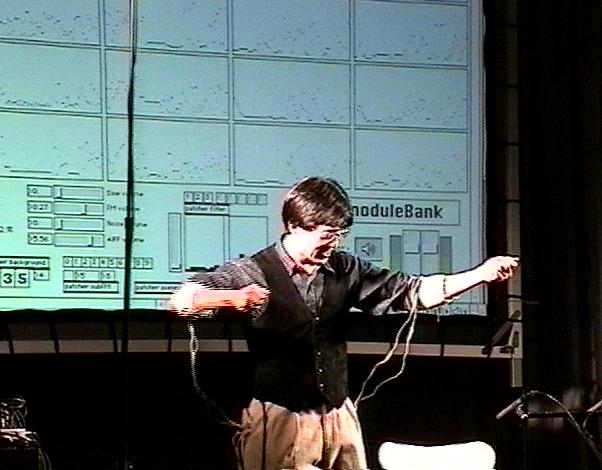

I composed a new work using "MiniBioMuse-III" for my European tour in September 2001. The work is titled "BioCosmicStorm-II", and the system is built in the Max/MSP environment. All 16 channels of electromyogram signals are displayed on the Max/MSP screen and projected on stage, so the audience can easily understand the relation between the sound and the performance. The work was performed in Paris (CCMIX), Kassel and Hamburg. Three types of sound are generated across the scenes: (1) 8 + 8 channels of bandpass filters fed with white noise, (2) 16 sine-wave generators with individual pitches, and (3) FM synthesis generators with 3 + 3 operators and 10 parameters. All sounds are generated in real time from the sensor.

Performance of "BioCosmicStorm-II"

2.4. Parameter Mapping in Composition

The first figure below shows the main Max/MSP patch for the work "BioCosmicStorm-II" in running mode, with 16 real-time display windows for the 16 channels of EMG input. The second figure shows the same patch in editing mode. The "sinusoidal synthesis" part of the work, to which the 16 sensor outputs are mapped, is programmed in this main patch using "Hide-mode" objects, which are not visible in running mode.

Main Patch of "BioCosmicStorm-II"

Edit Mode of Main Patch
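The Max/MSP patch is shown only as a screenshot. As a minimal sketch of the idea of driving 16 sine-wave generators from 16 sensor channels, the Python code below scales each 7-bit channel value to the amplitude of one oscillator and sums the bank. The pitches (a harmonic series on 110 Hz), the sample rate, and the function names are assumptions for illustration and are not taken from the work.

```python
import math

# Assumed pitches: the first 16 harmonics of 110 Hz (not from the paper).
PITCHES_HZ = [110.0 * (k + 1) for k in range(16)]

def emg_to_amplitudes(midi_values):
    """Scale 7-bit sensor values (0-127) to oscillator amplitudes in 0.0-1.0."""
    return [v / 127.0 for v in midi_values]

def render_sine_bank(midi_values, pitches_hz=PITCHES_HZ,
                     sample_rate=44100, n_samples=441):
    """Render one block of audio: a sum of sines weighted by the sensor values."""
    amps = emg_to_amplitudes(midi_values)
    n_osc = len(pitches_hz)
    block = []
    for n in range(n_samples):
        t = n / sample_rate
        s = sum(a * math.sin(2.0 * math.pi * f * t)
                for a, f in zip(amps, pitches_hz))
        block.append(s / n_osc)      # normalize so the sum stays within -1..1
    return block
```

For example, `render_sine_bank([127] + [0] * 15)` produces only the lowest oscillator, at one sixteenth of full scale, while a relaxed arm (all values near zero) produces near silence.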

The figure below shows the sub-patch for the "FM synthesis" part of the work. The FM algorithm was designed by Suguru Goto and distributed by him at DSPSS2000 in Japan. Multiband filters were also used in this work.

SubPatch for FM Synthesis Block
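For readers unfamiliar with FM synthesis, the following Python sketch shows the textbook two-operator form, in which one sine wave modulates the phase of another, with a sensor value controlling the modulation index. It is not the algorithm designed by Suguru Goto and does not reproduce the 3 + 3 operator structure of the work. The carrier frequency, frequency ratio, maximum index, and function names are assumptions.

```python
import math

def emg_to_index(midi_value, max_index=8.0):
    """Map a 7-bit sensor value (0-127) to a modulation index (assumed range)."""
    return max_index * midi_value / 127.0

def fm_sample(t, carrier_hz, ratio, index):
    """One sample of two-operator FM at time t (seconds).

    ratio is the modulator-to-carrier frequency ratio, and index is the
    modulation depth in radians.
    """
    modulator = math.sin(2.0 * math.pi * carrier_hz * ratio * t)
    return math.sin(2.0 * math.pi * carrier_hz * t + index * modulator)

def render_fm(midi_value, carrier_hz=220.0, ratio=2.0,
              sample_rate=44100, n_samples=441):
    """Render a short block whose brightness follows the sensor value."""
    index = emg_to_index(midi_value)
    return [fm_sample(n / sample_rate, carrier_hz, ratio, index)
            for n in range(n_samples)]
```

At a sensor value of zero the index is zero and the output is a plain sine wave. Stronger muscle tension raises the index and adds sidebands, which makes the timbre brighter.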

3. "Breathing Media"

In computer music performance, the human performer generates a great deal of information that a computer system can detect, but the "sound" and "image" of the performance suffer from a fatal problem of delay. The final sound and the image of the performer's movements can only be detected after they have been produced, and the system needs finite conversion and computation time, so the performer always feels a delay in the response. I have therefore developed two new types of sensors with which a computer system can detect the performer's actions before they appear as sound or image.

3.1. Vocal Breath Sensor

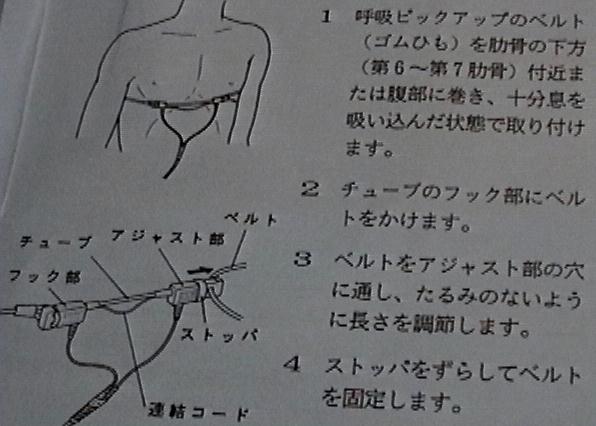

A vocal performer breathes heavily while performing, and her (or his) chest and belly repeatedly expand and contract. I therefore used a rubber tube sensor (below), whose resistance changes with tension, and built the Vocal Breath Sensor system, which converts the breathing information to MIDI in real time (below).

Rubber Tube Sensor

"Vocal Breath Sensor" System

The sensing information (below) is used to change the signal processing parameters applied to her voice and to control the parameters of real-time computer graphics on stage. The audience can listen to her voice and watch her behavior in a tight, exaggerated relationship produced by the system, which detects the changes before the sound.

Output of "Vocal Breath Sensor"

3.2. SHO Breath Sensor

I report the development of a compact, lightweight bi-directional breath pressure sensor for the SHO (Fig.17). The SHO is a traditional Japanese musical instrument, a mouth organ. The player blows into a hole in the mouthpiece, which sends air through bamboo tubes that are similar in design to the pipes of a Western organ and produce a similar timbre. The bi-directional breath pressure is measured by an air-pressure sensor module, converted to digital data by a 32-bit CPU, and then converted to MIDI information.

SHO Breath Sensor

The authoring/performing system displays the breathing information in real time and helps the performer with delicate control and effective parameter settings (below). The output of this sensor reflects not only (1) the air pressure inside the SHO and (2) the volume of the SHO sound, but also (3) the performer's preparatory actions and mental attitude, so it is very important for the system to detect this information before the sound starts.

Example of SHO Breath Data
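The conversion from bi-directional pressure to MIDI is not detailed in the paper. One simple way to carry a signed quantity in a 7-bit MIDI value is to place "no breath" at the centre of the range, as in the Python sketch below. The 10-bit converter, the rest reading of 512, the choice of exhaling as the positive direction, the dead band, and the function names are all assumptions. Controller number 2 is the standard MIDI Breath Controller, but whether the sensor actually uses it is not stated.

```python
ADC_MAX = 1023     # assumption: 10-bit A/D converter
ADC_REST = 512     # assumption: reading at ambient pressure (no breath)
MIDI_REST = 64     # centre of the 7-bit range stands for "no breath"
BREATH_CC = 2      # standard MIDI controller number for Breath Controller

def pressure_to_midi(adc_value):
    """Map a raw pressure reading to 0-127, with 64 meaning no breath."""
    adc_value = max(0, min(ADC_MAX, adc_value))
    signed = adc_value - ADC_REST             # -512 .. +511
    return MIDI_REST + int(signed / 8)        # truncates toward zero: 0 .. 127

def breath_direction(midi_value, dead_band=2):
    """Classify a value as 'exhale', 'inhale' or 'rest' (direction is assumed)."""
    if midi_value > MIDI_REST + dead_band:
        return "exhale"
    if midi_value < MIDI_REST - dead_band:
        return "inhale"
    return "rest"

def control_change(channel, controller, value):
    """Build the three bytes of a MIDI control change message."""
    return bytes([0xB0 | (channel & 0x0F), controller & 0x7F, value & 0x7F])
```

A host patch could watch for the value leaving the dead band as an early cue that the performer is preparing a breath, before any sound is produced.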

3.3. Performances with SHO Breath Sensor

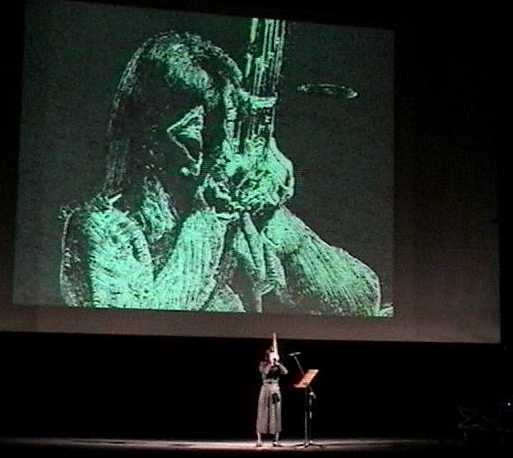

Performance of "Visional Legend" (Kassel)

I built this bi-directional SHO breath sensor myself, and it is used by the Japanese SHO performer and composer Tamami Tono Ito. The "Breathing Media" project is her own. I composed an interactive multimedia work called "Visional Legend", which was performed in Kassel (2 concerts) and Hamburg in September 2001 (above). She has composed several works using this sensor, and Figure 20 shows one of them, "I/O". Her breath controls both sound synthesis and live graphics.

Performance of "I/O" (SUAC Japan)

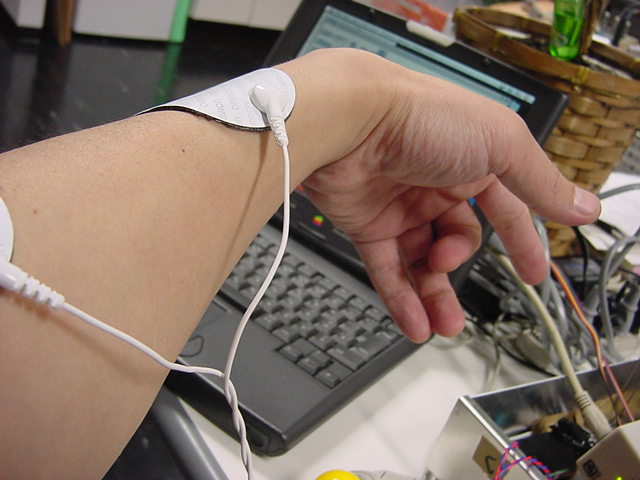

4. Bio-Feedback System

Finally, I report my newest development, a compact and lightweight 8-channel biological feedback system (below). The feedback signal consists of high-voltage (10V-100V) electric pulses like those of a "low frequency massage" device (below). The waveshape, voltage and density of the pulses are controlled in real time by MIDI from the system. The purposes of this feedback are: (1) letting the performer detect cues from the system without the audience noticing, (2) delicate control of sounds and graphics with a sense of feedback in a virtual environment, and (3) live performance that goes beyond anticipation because of the electric trigger.

Bio-Feedback System

Example of Bio-Feedback signal

Bio-Feedback contacts

4.1. Application Example (1)

This is a performance of the work "It was going better If I would be sadist truly.", composed and performed by Ken Furudachi in February 2002 in Japan. Two DJ performers scratching discs were on stage, and the DJ sounds generated many types of bio-feedback signals through Max/MSP and this system. The performer shows the relation between the input sounds and the output performance with his body alone. This work was the first application of the system.

Performance of "It was going better If I would be sadist truly."

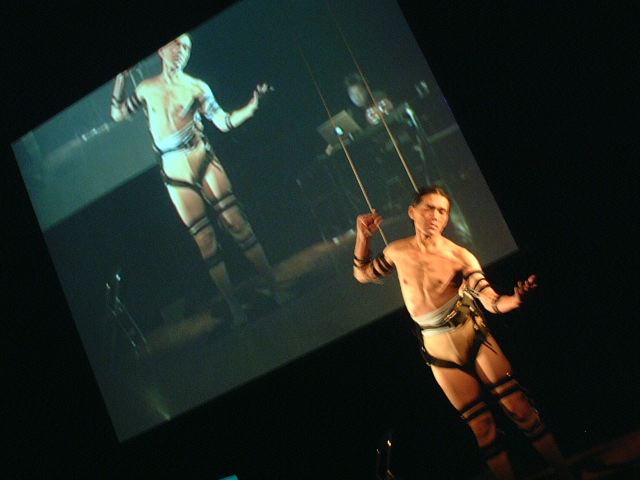

4.2. Application Example (2)

This is a performance of the work "Flesh Protocol", composed by Masayuki Akamatsu and performed by Masayuki Sumi in February 2002 in Japan. The performer is a professional dancer, so his strong, well-trained body can accept electric pulses twice as large. The composer produces many noises and sounds with Max/MSP, and the converted signals control the body of the performer on stage. The relation between them is clearly shown in real time by the screen and the motions on stage.

Performance of "Flesh Protocol"

4.3. Application Example (3)

This is a performance of the work "Ryusei Raihai", composed by Masahiro Miwa in March 2002 in Japan. The four performers connected to the system act as "instruments" for special messages on the Internet, selected by the composer's filtering program. When a particular piece of data occurs on the network, the system triggers one of the performers, who then plays a hand-held bell in real time.

Performance of "Ryusei Raihai"

4.4. Possibility of "Hearing pulse"

I would like to discuss the possibility of "hearing pulses" without using the ears. In experiments during the development of this system, I had many interesting experiences of detecting "sounds" without acoustic means (speakers, etc.). The numbing ache produced by this bio-feedback system differs with the waveshape, frequency, and so on. This suggests that a hearing-impaired person might perceive sound without using the ears. Another experiment, in which the source was changed from simple pulses to musical signals, also suggests the possibility of listening to music through this feedback.

4.5. Combined force display system

It is well known that the EMG sensor is a good instrument, but it has a weak point compared with other "mechanical" instruments: it does not provide the "physical reaction of performance" that a guitar, piano, etc. does. To address this, one musician generates a very loud sound as body-sonic feedback for the performance. I have just started researching the combination of the EMG sensor and the bio-feedback system on the same electrodes using a time-sharing technique. Figure 27 shows the block diagram of the combination. The card-size controller "AKI-H8" contains a 32-bit CPU, A/D, D/A, SIO, RAM, Flash EEPROM and many ports. The electrode channels (max: 8ch) are multiplexed for A/D and D/A. An analog switch with high isolation impedance and high input-voltage tolerance is controlled by the CPU ports in synchronization with the system.

Block diagram of "Combined System"

5. Conclusions

Several research efforts and experimental applications of human-computer interaction in multimedia performing arts were reported. Interactive multimedia art is an interesting laboratory for research on human interfaces and perception/cognition, so I will continue this research with many experiments.

6. References

[1] Nagashima, Y., PEGASUS-2 : Real-Time Composing Environment with Chaotic Interaction Model, Proceedings of ICMC1993, (ICMA, 1993)

[2] Nagashima, Y., Multimedia Interactive Art : System Design and Artistic Concept of Real-Time Performance with Computer Graphics and Computer Music, Proceedings of Sixth International Conference on Human-Computer Interaction, (ELSEVIER, 1995)

[3] Nagashima, Y., A Compositional Environment with Interaction and Intersection between Musical Model and Graphical Model -- Listen to the Graphics, Watch the Music --, Proceedings of ICMC1995, (ICMA, 1995)

[4] Nagashima, Y., Real-Time Interactive Performance with Computer Graphics and Computer Music, Proceedings of 7th IFAC/IFIP/IFORS/IEA Symposium on Analysis, Design, and Evaluation of Man-Machine Systems, (IFAC, 1998)

[5] Nagashima, Y., BioSensorFusion: New Interfaces for Interactive Multimedia Art, Proceedings of ICMC1998, (ICMA, 1998)

[6] Nagashima, Y., 'It's SHO time' -- An Interactive Environment for SHO(Sheng) Performance, Proceedings of ICMC1999, (ICMA, 1999)

[7] Composition of "Visional Legend" - http://nagasm.org/ASL/kassel/index.html

[8] Bahn, C., T. Hahn, and D. Trueman, Physicality and Feedback: A Focus on the Body in the Performance of Electronic Music, Proceedings of ICMC2001, (ICMA, 2001)

[9] Boulanger, R., and M. V. Mathews, The 1997 Mathews' Radio Baton and Improvisation Modes, Proceedings of ICMC1997, (ICMA, 1997)

[10] Camurri, A., Interactive Dance/Music Systems, Proceedings of ICMC1995, (ICMA, 1995)

[11] Chadabe, J., The Limitations of Mapping as a Structural Descriptive in Electronic Instruments, Proceedings of NIME2002

[12] Chafe, C., Tactile Audio Feedback, Proceedings of ICMC1993, (ICMA, 1993)

[13] Jacob, R.J.K., Human-Computer Interaction: Input Devices, ACM Computing Surveys 28(1), 1996

[14] Jorda, S., Improvising with Computers: A Personal Survey (1989-2001), Proceedings of ICMC2001, (ICMA, 2001)

[15] Kanamori, T., H. Katayose, S. Simura, and S. Inokuchi., Gesture Sensor in Virtual Performer, Proceedings of ICMC1993, (ICMA, 1993)

[16] Kanamori, T., H. Katayose, Y. Aono, S. Inokuchi, and T. Sakaguchi, Sensor Integration for Interactive Digital Art, Proceedings of ICMC1995, (ICMA, 1995)

[17] Katayose, H., T. Kanamori, K. Kamei, Y. Nagashima, K. Sato, S. Inokuchi, and S. Simura, Virtual Performer, Proceedings of ICMC1993, (ICMA, 1993)

[18] Knapp, R. B., and H. S. Lusted, A Bioelectric Controller for Computer Music Applications, Computer Music Journal 14(1), 1990

[19] Roads, C., The Computer Music Tutorial. Cambridge, MA: The MIT Press, 1996

[20] Rowe, R., Interactive Music Systems - Machine Listening and Composing. Cambridge, Mass.: The MIT Press, 1993

[21] Tanaka, A., Musical Technical Issues in Using Interactive Instrument Technology with Application to the Biomuse, Proceedings of ICMC1993, (ICMA, 1993)