Conference "Musical Interfaces and Robotics"

Date: November 27, 2017, 13:00-

Place: Conference Room 1 (1st floor), Tokyo University of the Arts, Department of Musical Creativity and the Environment

Address: 1 Chome-25-1 Senju, Adachi, Tokyo 120-0034, Japan

Telephone: +81 50-5525-2742

http://www.geidai.ac.jp/access/senju

Email: mce-office@ml.geidai.ac.jp

Participants:

Frédéric Bevilacqua (IRCAM)

Jean-Marc Pelletier (Nagoya Zokei University)

Kenjiro Matsuo (Invisible Designs Lab)

Shigeyuki Hirai (Kyoto Sangyo University)

Suguru Goto (Tokyo University of the Arts)

Yoichi Nagashima (Shizuoka University of Art and Culture)

(Alphabetical order)

Theme:

The conference presents research and the latest developments in musical interfaces and robotic musical instruments. Musical interfaces here refer to interactive systems for sound and music that use sensors and other devices to capture gesture and movement in real time. They also involve programming, such as mapping interfaces and gesture followers, as well as applications in media arts. Robotic musical instruments are automated instruments that are played by motors and controlled by a computer. The conference aims for a non-academic style, with discussion of the latest issues and future perspectives.

Descriptions:

Frédéric Bevilacqua

"Designing Sound Movement Interaction: Models, Mapping and Interfaces"

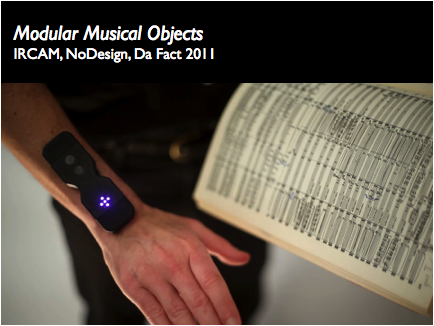

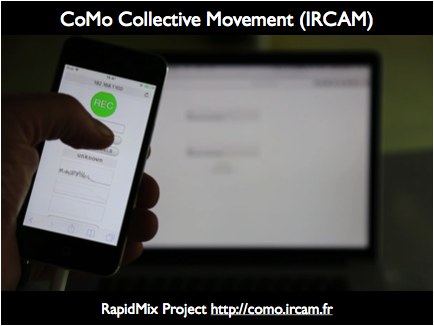

I will present the research performed by the Sound Music Movement Interaction team at IRCAM in Paris. Our research and applications concern the development of interactive systems for sound and music, and more specifically systems that allow for gesture and movement interaction. Various tangible interfaces, such as the Modular Musical Objects, have been developed, along with methods to map movement to sound. In particular, we use interactive machine learning techniques for continuous motion recognition and mapping, such as Gesture Follower or, more recently, the XMM library (by Jules Françoise). We have also recently experimented with systems that enable collective interaction using smartphones (the CoSiMa and Rapid-Mix projects). Many applications will be presented, mainly related to music and the performing arts.
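To make the idea of "mapping by demonstration" concrete, here is a minimal sketch under stated assumptions. The performer records a few pairs of (motion features, sound parameters), and a new motion is mapped by inverse-distance weighting of the nearest recorded examples. This is deliberately a much simpler technique than Gesture Follower or XMM, which rely on probabilistic models. The function name `map_by_demonstration` and the two-dimensional feature vectors are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Sequence, Tuple

# A demonstration pairs a motion feature vector with the sound parameters
# the performer chose for it (e.g. pitch in Hz and brightness 0..1).
Example = Tuple[Sequence[float], Sequence[float]]


def map_by_demonstration(examples: List[Example], motion: Sequence[float],
                         k: int = 2, eps: float = 1e-9) -> List[float]:
    """Hypothetical helper: interpolate sound parameters for `motion` from the
    k nearest demonstrations, weighted by inverse distance."""
    nearest = sorted(examples, key=lambda ex: math.dist(motion, ex[0]))[:k]
    distances = [math.dist(motion, m) for m, _ in nearest]
    # A motion that matches a demonstration exactly reproduces its sound.
    for d, (_, sound) in zip(distances, nearest):
        if d < eps:
            return list(sound)
    # Closer demonstrations contribute more to the interpolated result.
    weights = [1.0 / d for d in distances]
    total = sum(weights)
    n_params = len(nearest[0][1])
    return [
        sum(w * s[i] for w, (_, s) in zip(weights, nearest)) / total
        for i in range(n_params)
    ]


demos = [([0.0, 0.0], [220.0, 0.1]),
         ([1.0, 0.0], [440.0, 0.9])]
print(map_by_demonstration(demos, [0.5, 0.0]))
```

A motion halfway between the two demonstrations yields sound parameters halfway between them; continuous recognition with temporal models is what the dedicated libraries add on top of this basic idea.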

Links

http://frederic-bevilacqua.net/

Videos

Modular Musical Objects

Urban Musical Game

Mapping by Demonstration

Biography

Frédéric Bevilacqua is the head of the Sound Music Movement Interaction team at IRCAM in Paris (part of the joint research lab Science & Technology for Music and Sound, IRCAM/CNRS/Université Pierre et Marie Curie). His research concerns the modeling and design of interaction between movement and sound, and the development of gesture-based interactive systems. He holds a master's degree in physics and a Ph.D. in Biomedical Optics from EPFL (Switzerland). From 1999 to 2003 he was a researcher at the University of California, Irvine. In 2003 he joined IRCAM as a researcher on gesture analysis for music and the performing arts. He has been a keynote or invited speaker at several international conferences, such as ACM TEI'13. As coordinator of the Interlude project, he was awarded the 1st Prize of the Guthman Musical Instrument Competition (Georgia Tech) in 2011 and received the "Prix ANR du Numérique" from the French National Research Agency (category Societal Impact, 2013).

Jean-Marc Pelletier

Title: Audio-visual Congruence Effects and Music Design

Summary: Certain associations of sounds and visuals seem more "natural" to many people than others. From these interactions, we can isolate a number of principles to guide the design of musical tools and performances.

Bio: Assistant professor at Nagoya Zokei University. Based in Japan since 1999, my research interests span from interfaces for musical expression to game design.

Link: http://jmpelletier.com

Kenjiro Matsuo

CEO of Sound Art Unit invisible designs lab a.k.a dir

"New Point of View for Music and Sound"

"Expand Music" is the lab's concept. The presentation will focus mainly on the "Z-Machines project": how the robots work to play musical instruments, and related topics.

https://www.youtube.com/watch?v=VkUq4sO4LQM

https://wired.jp/2014/04/19/squarepusher-robot-music/

Shigeyuki Hirai

Title:

Interactive Sound and Music Systems for Everyday Life

Abstract:

In this talk, I will present a research project at Kyoto Sangyo University on the smart house of the future, equipped with various sensors and displays. The research focuses on interactive sonification and on everyday environments that can be played as musical instruments. In particular, the project deals with practical systems developed for the Japanese bathroom: for instance, Bathonify, an interactive sonification system that represents a bather's state (motion or vital signs) in a bathtub, and TubTouch and RapTapBath, which embed sensors inside the edge of a bathtub so that it works as a controller for various sound and music applications. Through this presentation, the possibilities and issues of incorporating interactive sound and music into everyday facilities will be discussed.
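As a rough illustration of what interactive sonification of a vital sign can mean, the sketch below maps a heart rate onto a pitch so that a faster pulse sounds higher. This is not how Bathonify works (its mappings are not described here); the function name, the 50 to 120 bpm input range, and the MIDI note range 48 to 84 are all hypothetical choices for illustration.

```python
def heart_rate_to_frequency(bpm: float,
                            bpm_range: tuple = (50.0, 120.0),
                            note_range: tuple = (48, 84)) -> float:
    """Hypothetical sonification: map a heart rate (beats per minute) onto a
    pitch in Hz. The ranges are illustrative assumptions."""
    lo, hi = bpm_range
    # Normalise to 0..1 and clamp readings outside the expected range.
    t = min(max((bpm - lo) / (hi - lo), 0.0), 1.0)
    # Interpolate linearly in MIDI note space (equal-tempered semitones).
    note = note_range[0] + t * (note_range[1] - note_range[0])
    # Convert the MIDI note to Hz; MIDI note 69 is A4 = 440 Hz.
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


for bpm in (60, 80, 100):
    print(bpm, round(heart_rate_to_frequency(bpm), 1))
```

Interpolating in note space rather than directly in Hz keeps equal changes in heart rate perceived as roughly equal pitch steps.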

Biography:

Shigeyuki Hirai is an Associate Professor in the Faculty of Computer Science and Engineering at Kyoto Sangyo University. Prior to his present affiliation, he was a system engineer at OGIS-RI Co., Ltd. and a researcher at KRI Inc. He received a Ph.D. in engineering from Osaka University in 2002. His research interests are HCI, entertainment computing, and music computing. For the past fifteen years, he has worked on interactive systems for everyday life, especially the research and development of smart houses with sound for the Japanese lifestyle. He is a member of ACM, IPSJ, IEICE, the Human Interface Society, and The Society for Art and Science.

http://www.cc.kyoto-su.ac.jp/~hirai/index-e.html

- http://ubiqmedia.cse.kyoto-su.ac.jp/?page_id=68

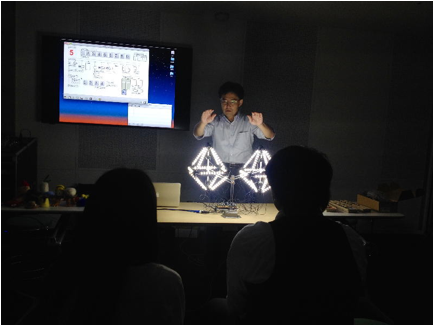

Suguru Goto

"My Robot Works for Music and My Sensor-Based Performance"

Audience: « Why are you making a robot for music? »

Me: « I want to go beyond the limits of what a human player can do. »

Audience: « Yeah, sure. The robot can play any music forever without any complaints and without any fees, hmm? (Sniff) »

Me: « Having gone beyond human limits, I would like to be able to listen to music that I have never heard. »

Audience: « Well, you know, a robot does not have any emotion or feeling. »

Me: « The emotion and the feeling belong to the music itself. A robot may possess the intelligence to perform music with expression. Humans think they are too important to accept this, so they do not share the emotion of music when it is performed by a non-human. »

Audience: « You seem to have watched too many science fiction movies. »

Me: « HAL is not a story of the future. It has already been realized. »

Audience: « I'd prefer to go to a concert of warm and tasty music played by humans. »

Me: « What is warm and tasty music? The more I think about music, the less I know what the definition of music really is. »

Audience: « Well, I do not know either. But don't you want to listen to good music? »

Me: « Certainly. But it does not matter who plays or composes it. »

Audience: « So a robot is no longer a servant of humans? That sounds great. (Sniff) »

Me: « In fact, the more I work on robots, the more I appreciate the complexity of humans. However, I have never thought of a robot as a replacement for humans. Humans are too used to customs and traditions. I would rather expect a robot to do something different from humans, that is to say, a new art form. »

Suguru Goto is a media artist, inventor, and performer, and he is considered one of the most innovative artists and a spokesperson for a new generation of Japanese artists.

Yoichi Nagashima

Bio-Sensing and Bio-Feedback Interfaces

Brief Summary of the Presentation

I will report (with a short demonstration performance) on new instruments that use biological information sensing, biofeedback, and multi-channel tactile sensors. Recently I developed three projects: (1) a new EMG sensor, "Myo", customized to be used as a double sensor, (2) a new brain sensor, "Muse", customized to communicate via OSC, and (3) an originally developed "MRTI (Multi Rubbing Tactile Instrument)" with 10 tactile sensors. The key concept is biofeedback, which has recently been receiving attention in neuroscience for its relation to emotion and interoception.

The commercial sensors "Myo" and "Muse" are useful for regular consumers. However, we cannot use them as-is as new interfaces for musical expression, because they have a number of problems and limitations. I analyzed them and adapted them for interactive music. "DoubleMyo" was developed with an original tool in order to use two Myo sensors at the same time, to inhibit the "sleep mode" during live performance on stage, and to communicate via OSC. "MuseOSC" was developed with an original tool in order to receive four channels of brain waves and the 3-D vectors of the head.
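Both tools send their data over OSC (Open Sound Control). As a minimal sketch, assuming all arguments are 32-bit floats, the function below encodes one OSC 1.0 message using only the Python standard library: the address and the type-tag string are NUL-terminated and padded to multiples of 4 bytes, followed by big-endian float32 arguments. The actual address patterns used by DoubleMyo and MuseOSC are not given here, so `/eeg` and the helper names are hypothetical.

```python
import struct
from typing import Sequence


def osc_string(text: str) -> bytes:
    """OSC-string: ASCII bytes, NUL-terminated, padded with NULs to a
    multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address: str, values: Sequence[float]) -> bytes:
    """Encode one OSC 1.0 message whose arguments are all float32."""
    type_tags = "," + "f" * len(values)  # e.g. ",ffff" for four floats
    # Each argument is a big-endian 32-bit IEEE 754 float.
    args = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + args


# Hypothetical address carrying four brain-wave channels.
packet = encode_osc_floats("/eeg", [0.1, 0.2, 0.3, 0.4])
print(len(packet), packet[:16])
```

To send such a packet, a plain UDP socket is enough, for example `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, ("127.0.0.1", 9000))`, where the host and port are placeholders for whatever the receiving patch listens on.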

Although it does not deal with direct bio-signals, the tactile sensor is very interesting and important for human sensing. RT Corporation in Japan released the "PAW sensor", a small PCB (21.5 mm × 25.0 mm, weight 1.5 g) with a large cylinder of urethane foam on it. The sensor outputs four channels of voltage, converted in a time-shared manner, which capture the nuances of fingers rubbing or touching the urethane foam. I then developed a system that uses 10 PAW sensors mounted on an egg-shaped plastic container, one for each of the ten fingers. The performer plays it by rubbing with ten fingers, and a total of 16 parameters from the PAW sensors are mapped in real time to generate four voices with a formant synthesis algorithm and to generate real-time OpenGL graphics with a fractal algorithm.
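The mapping from sensor parameters to formant-synthesized voices can be sketched as follows. How the 16 parameters are actually assigned is not described, so the grouping below (four voices, each driven by four normalized parameters: fundamental frequency, first and second formant, amplitude), the frequency ranges, the bandwidths, and the sample rate are all hypothetical. The synthesis itself is a textbook formant approach: an impulse train at the fundamental, filtered by two parallel two-pole resonators.

```python
import math
from typing import List, Sequence, Tuple

SR = 16000  # sample rate in Hz (assumed)


def resonator(x: Sequence[float], freq: float, bw: float,
              sr: int = SR) -> List[float]:
    """Two-pole resonant filter centred on `freq` Hz with bandwidth `bw` Hz."""
    r = math.exp(-math.pi * bw / sr)  # pole radius from bandwidth
    c1 = 2.0 * r * math.cos(2.0 * math.pi * freq / sr)
    c2 = -r * r
    y1 = y2 = 0.0
    out = []
    for s in x:
        # (1 - r) is a rough gain normalisation, not an exact one.
        y = (1.0 - r) * s + c1 * y1 + c2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def formant_voice(f0: float, f1: float, f2: float, amp: float,
                  n: int, sr: int = SR) -> List[float]:
    # Glottal source approximated by an impulse train at f0.
    period = max(1, int(round(sr / f0)))
    source = [1.0 if i % period == 0 else 0.0 for i in range(n)]
    # Two formants filtered in parallel and summed.
    a = resonator(source, f1, 80.0, sr)
    b = resonator(source, f2, 120.0, sr)
    return [amp * (u + v) for u, v in zip(a, b)]


def params_to_voices(params: Sequence[float]) -> List[Tuple[float, ...]]:
    """Hypothetical grouping of 16 normalised (0..1) parameters into four
    voices of (f0, F1, F2, amplitude)."""
    if len(params) != 16:
        raise ValueError("expected 16 parameters")
    voices = []
    for i in range(0, 16, 4):
        p0, p1, p2, p3 = (min(max(p, 0.0), 1.0) for p in params[i:i + 4])
        voices.append((100.0 + 300.0 * p0,    # f0: 100-400 Hz
                       300.0 + 600.0 * p1,    # F1: 300-900 Hz
                       900.0 + 1600.0 * p2,   # F2: 900-2500 Hz
                       p3))                   # amplitude: 0-1
    return voices


def render(params: Sequence[float], n: int = 1600, sr: int = SR) -> List[float]:
    """Mix the four voices into one block of n samples."""
    voices = [formant_voice(*v, n=n, sr=sr) for v in params_to_voices(params)]
    return [sum(samples) / 4.0 for samples in zip(*voices)]


block = render([0.5] * 16)
print(len(block), max(abs(s) for s in block))
```

In a live system this function would be called once per audio block with the latest sensor values. Computing each block from scratch, as done here for brevity, resets the filter state and would cause clicks at block boundaries, so a real implementation would keep the resonator state between blocks.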

Biography

Yoichi Nagashima, composer/researcher/PE, was born in 1958 in Japan. He learned and played several instruments: violin, recorder, guitar, keyboards, electric bass, drums, and vocal/choral music. He was the conductor of the Kyoto University Choir, composed over 100 choral works, and studied nuclear physics there. As an engineer at Kawai Musical Instruments, he developed sound generator LSIs, designed electronic musical instruments, and produced music software. Since 1991, he has been the director of the "Art & Science Laboratory" in Hamamatsu, Japan, where he produces many interactive tools for real-time music performance with sensors/MIDI, cooperates with researchers and composers, and composes experimental pieces. He is also a key member of the Japanese computer music community. From 2000, he also served as an associate professor at SUAC (Shizuoka University of Art and Culture), Faculty of Design, Department of Art and Science, where he teaches multimedia, computer music, and media art. As a composer of computer music, he collaborates with many musicians in his compositions: piano, organ, percussion, vocal, flute, sho, koto, shakuhachi, dance, etc. In 2004, he organized NIME04 and served as its General Chair, and he became an associate professor in the master's course at SUAC. He became a professor in April 2007. Since 2000, he has supported over 120 works and projects of interactive/multimedia installations, composed and performed many works of computer music, and organized and given many lectures and workshops all over the world.

http://nagasm.org/ASL/TUA2017/index.html